Chapter 11 Inference for Regression

In our penultimate chapter, we’ll revisit regression models we first studied in Chapters 6 and 7. Armed with our knowledge of confidence intervals and hypothesis test from Chapters 9 and 10, we’ll be able to apply statistical inference to regression intercepts and slopes.

Needed packages

Let’s load all the packages needed for this chapter (this assumes you’ve already installed them). Recall from our discussion in Section 5.4.1 that loading the tidyverse package by running library(tidyverse) loads the following commonly used data science packages all at once:

ggplot2for data visualizationdplyrfor data wranglingtidyrfor converting data to “tidy” formatreadrfor importing spreadsheet data into R- As well as the more advanced

purrr,tibble,stringr, andforcatspackages

If needed, read Section 2.3 for information on how to install and load R packages.

library(tidyverse)

library(moderndive)

library(infer)11.1 Regression refresher

Before jumping into inference for regression, let’s remind ourselves of the University of Texas student evaluations analysis in Section 6.1.

11.1.1 Teaching evals analysis

Recall using simple linear regression we modeled the relationship between

- A numerical outcome variable \(y\), the instructor’s teaching score and

- A single numerical explanatory variable \(x\), the instructor’s beauty score.

We first created an evals_ch6 data frame that selected a subset of variables from the evals data frame included in the moderndive package. This evals_ch6 data frame contains only the variables of interest for our analysis, in particular the instructor’s teaching score and the beauty rating bty_avg:

evals_ch6 <- evals %>%

select(ID, score, bty_avg, age)

glimpse(evals_ch6)Observations: 463

Variables: 4

$ ID <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18…

$ score <dbl> 4.7, 4.1, 3.9, 4.8, 4.6, 4.3, 2.8, 4.1, 3.4, 4.5, 3.8, 4.5, 4…

$ bty_avg <dbl> 5.00, 5.00, 5.00, 5.00, 3.00, 3.00, 3.00, 3.33, 3.33, 3.17, 3…

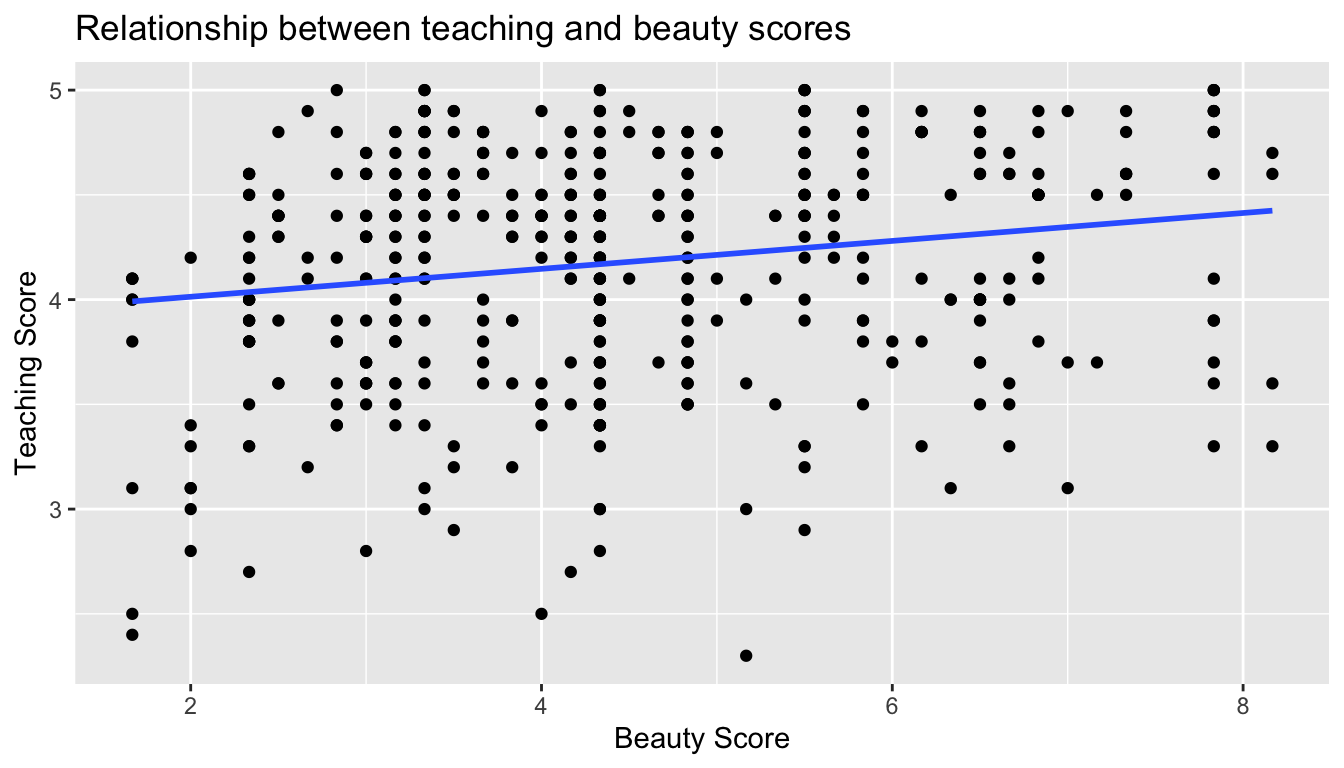

$ age <int> 36, 36, 36, 36, 59, 59, 59, 51, 51, 40, 40, 40, 40, 40, 40, 4…In Section 6.1.1, we performed an exploratory data analysis of the relationship between these two variables. We saw there that there was a weakly positive correlation of 0.187 between the two variables. This was evidenced in Figure 11.1 of the scatterplot along with the “best fitting” regression line that summarizes the linear relationship between the two variables.

ggplot(evals_ch6, aes(x = bty_avg, y = score)) +

geom_point() +

labs(x = "Beauty Score", y = "Teaching Score",

title = "Relationship between teaching and beauty scores") +

geom_smooth(method = "lm", se = FALSE)

FIGURE 11.1: Relationship with regression line.

Looking at this plot again, you might be asking “But is that line really that positive in its slope?” It does increase from left to right as the bty_avg variable increases, but by how much? To get to this information, we used the lm() function to fit a regression model, which took a formula y ~ x = score ~ bty_avg as an input.

- We first “fit” the linear regression model using the

lm()function and save it inscore_model. - We get the regression table by applying the

get_regression_table()from themoderndivepackage toscore_model.

# Fit regression model:

score_model <- lm(score ~ bty_avg, data = evals_ch6)

# Get regression table:

get_regression_table(score_model)| term | estimate | std_error | statistic | p_value | lower_ci | upper_ci |

|---|---|---|---|---|---|---|

| intercept | 3.880 | 0.076 | 50.96 | 0 | 3.731 | 4.030 |

| bty_avg | 0.067 | 0.016 | 4.09 | 0 | 0.035 | 0.099 |

Using the values in the estimate column of the regression table in Table 11.1, we could then obtain the equation of the “best-fitting” regression line in blue in Figure 11.1 where \(b_0\) is the fitted intercept and \(b_1\) is the fitted slope for bty_avg.

\[ \begin{aligned} \widehat{y} &= b_0 + b_1 \cdot x\\ \widehat{\text{score}} &= b_0 + b_{\text{bty}\_\text{avg}} \cdot\text{bty}\_\text{avg}\\ &= 3.880 + 0.067\cdot\text{bty}\_\text{avg} \end{aligned} \]

Recall the interpretation of the \(b_1\) = 0.067 value of the fitted slope:

For every increase of one unit in beauty rating, there is an associated increase, on average, of 0.067 units of evaluation score.

Thus, the slope value quantifies the relationship between the y variable of score and the x variable of bty_avg. We also discussed the intercept value of \(b_0\) = 3.88 and its lack of practical interpretation since the range of possible beauty scores does not include 0.

11.1.2 Sampling scenario

Now let’s view the instructors for these 463 courses in as a representative sample from a greater population as we defined in Section 8.3.1. Perhaps these instructors can be viewed as a representative sample of all instructors in the University of Texas system, not just UT Austin? Or perhaps all Texas university and college level instructors? Or all instructors in the United States? For our purposes, since these data were collected between 2000-2002, we’ll view the evals_ch6 as a representative sample of courses between 1995 and 2002.

Since we are viewing these \(n\) = 463 courses as a sample, we can view our fitted slope \(b_1\) = 0.067 as a point estimate of a population slope \(\beta_1\), in other words the slope quantifying the relationship between teaching score and beauty average bty_avg for all instructors in our population. Similarly, we can view our fitted intercept \(b_0\) = 3.88 as a point estimate of a population intercept \(\beta_0\) for all instructors in our population. Putting these two together, we can view the equation of the fitted line \(\widehat{y}\) = \(b_0 + b_1 \cdot x\) = \(3.880 + 0.067 \cdot \text{bty}\_\text{avg}\) as an estimate of some true and unknown population line \(y = \beta_0 + \beta_1 \cdot x\).

Thus we can draw parallels between our teaching evals analysis and all our previously seen sampling scenarios. In this chapter, we’ll focus on the final two scenarios of Table 8.8: regression slopes and regression intercepts.

| Scenario | Population parameter | Notation | Point estimate | Notation. |

|---|---|---|---|---|

| 1 | Population proportion | \(p\) | Sample proportion | \(\widehat{p}\) |

| 2 | Population mean | \(\mu\) | Sample mean | \(\overline{x}\) or \(\widehat{\mu}\) |

| 3 | Difference in population proportions | \(p_1 - p_2\) | Difference in sample proportions | \(\widehat{p}_1 - \widehat{p}_2\) |

| 4 | Difference in population means | \(\mu_1 - \mu_2\) | Difference in sample means | \(\overline{x}_1 - \overline{x}_2\) |

| 5 | Population regression slope | \(\beta_1\) | Fitted regression slope | \(b_1\) or \(\widehat{\beta}_1\) |

| 6 | Population regression intercept | \(\beta_0\) | Fitted regression intercept | \(b_0\) or \(\widehat{\beta}_0\) |

Since we are now viewing our fitted slope \(b_1\) and fitted intercept \(b_0\) as estimates based on a sample, these estimates will be subject to sampling variability as we’ve seen numerous times throughout this book. In other words, if we collected new sample of data on a different set of \(n\) = 463 courses and their instructors, the new fitted slope \(b_1\) will very likely differ from 0.067. The same goes for the new fitted intercept \(b_0\).

But by how much will they differ? In other words, by how much will these estimates vary? This information is contained in remaining columns in Table 11.1. Our knowledge about sampling from Chapter 8, confidence intervals from Chapter 9, and hypothesis tests from Chapter 10 will help us interpret these remaining columns.

11.2 Interpreting regression tables

Both in Chapter 6 and in our regression refresher earlier, we focused only on the two left-most columns the regression table in Table 11.1: term and estimate. Let’s now shift to focus on the remaining columns of std_error, statistic, p_value, and the two columns related to confidence intervals lower_ci and upper_ci that were not discussed previously. Given the lack of practical interpretation for the fitted intercept \(b_0\), we’ll focus only on the second row of the table corresponding to the fitted slope \(b_1\).

| term | estimate | std_error | statistic | p_value | lower_ci | upper_ci |

|---|---|---|---|---|---|---|

| intercept | 3.880 | 0.076 | 50.96 | 0 | 3.731 | 4.030 |

| bty_avg | 0.067 | 0.016 | 4.09 | 0 | 0.035 | 0.099 |

11.2.1 Standard error

The third column of the regression table in Table 11.1 std_error corresponds to the standard error of our estimates. Recall the definition of standard error we saw in Section 8.3.2:

The standard error is the standard deviation of any point estimate from a sampling scenario.

So what does this mean in terms of the fitted slope \(b_1\) = 0.067? This value is just one possible value of the fitted slope resulting from this particular sample of \(n\) = 463 pairs of score and bty_avg. However, if we collected a different sample of \(n\) = 463 pairs of bty_avg and score, we will almost certainly obtain a different fitted slope \(b_1\). This is due to sampling variability, as we studied in Chapter 8.

Say we hypothetically collected 1000 such samples and plotted the histogram of the 1000 resulting values of the fitted slope \(b_1\), this would be a visualization of the sampling distribution of \(b_1\). Recall we introduced the sampling distribution for the sample proportion \(\widehat{p}\) of a shovel’s balls that are red in Section 8.3.2. Further recall that the standard deviation of the sampling distribution of \(b_1\) has a special name: the standard error.

This value quantifies how much variation in the fitted slope \(b_1\) one would expect from sample-to-sample. So in our case, we can expect about 0.016 units of deviation on the bty_avg slope variable. Recall that estimate and std_error play a key role in helping us to make an educated guess about possible values for the unknown population slope \(\beta_1\) relating to all instructors in our population

11.2.2 Test statistic

The fourth column of the regression table in Table 11.1 statistic corresponds to a test statistic relating to the following hypothesis test:

\[ \begin{aligned} H_0 &: \beta_1 = 0\\ \text{vs } H_A&: \beta_1 \neq 0 \end{aligned} \]

Recall our definitions of hypothesis tests and test statistics we introduced in Section 10.2:

A hypothesis test consists of a test between two competing hypotheses: 1) a null hypothesis \(H_0\) versus 2) an alternative hypothesis \(H_A\).

A test statistic is point estimate/sample statistic formula used for hypothesis testing.

Here our null hypothesis \(H_0\) assumes that the population slope \(\beta_1\) of the relationship between teaching and beauty score for all instructors in our population is 0. If the population slope \(\beta_1\) is truly 0, then this is equivalent to saying that there is no relationship between teaching and beauty score. In other words, \(x\) = beauty score would have no associated effect on \(y\) = teaching score. The alternative hypothesis \(H_A\) on the other hand assumes that population slope \(\beta_1\) is not 0, thus meaning it could be either positive or negative. Recall we called such alternative hypotheses two-sided. By convention, all hypothesis testing for regression assumes two-sided alternatives.

So much in the same vein as the “hypothesized universe” of no gender discrimination we assumed in our promotions activity in Section 10.1, when conducting this hypothesis test we’ll assume a “hypothesized universe” where there is no relationship between teaching and beauty scores. In other words, we’ll assume the null hypothesis \(H_0: \beta_1 = 0\) is true.

The statistic column in the regression table is a tricky one however. It corresponds to a “standardized statistic” as we saw in Subsection 10.6.1 where we computed a two-sample \(t\) statistic where the null distribution is a \(t\)-distribution. This is a tricky statistic for individuals new to statistical inference to study, so we’ll jump into interpreting the p-value in the next section. If you’re curious however, we’ve included a discussion of how this value is computed in Section 11.2.5.

11.2.3 p-value

The fifth column of the regression table in Table 11.1 p-value corresponds to the p-value of the above hypothesis test \(H_0: \beta_1 = 0\) versus \(H_A: \beta_1 \neq 0\). Recall our definition of a p-value we introduced in Section 10.2:

A p-value is the probability of obtaining a test statistic just as extreme or more extreme than the observed test statistic assuming the null hypothesis \(H_0\) is true

You can intuitively think of the p-value as quantifying how extreme the observed fitted slope of \(b_1\) = 0.067 is in a “hypothesized universe” where is there is no relationship between teaching and beauty scores. Since in this case the p-value is 0, following the hypothesis testing procedure we outlined in Section 10.4, for any choice of significance level \(\alpha\) we would reject \(H_0\) in favor of \(H_A\). Using non-statistical language, this is saying: we reject the hypothesis that there is no relationship between teaching and beauty scores in favor of the hypothesis that that is. In other words, the evidence suggests there is a significant relationship, one that is positive.

More precisely however, the p-value corresponds to how extreme the observed test statistic of 4.09 is when compared to the appropriate null distribution. We’ll perform a simulation using the infer package in Section 11.4. An extra caveat here is that this hypothesis test is only valid if certain “conditions for inference for regression” are met, which we’ll introduce shortly in Section 11.3.

11.2.4 Confidence interval

The two right-most columns of the regression table in Table 11.1 lower_ci and upper_ci correspond to the endpoints of the 95% confidence interval for the population slope \(\beta_1\). Recall our analogy of “nets are to fish” what “confidence intervals are to population parameters” from Section 9.3. The resulting 95% confidence interval for \(\beta_1\) of (0.035, 0.099) is a range of plausible values for the population slope \(\beta_1\) of the linear relationship between teaching and beauty score.

As we discussed in Section 9.5.2 on the precise and shorthand interpretation of confidence intervals, the statistically precise interpretation of this confidence interval is: “if we repeated this sampling procedure a large number of times, we expect about 95% of the resulting confidence intervals to capture the value of the population slope \(\beta_1\).” However, we’ll summarize this using our shorthand interpretation that “we’re 95%”confident" that the true population slope \(\beta_1\) lies between 0.035 and 0.099."

Notice in this case that the resulting 95% confidence interval for \(\beta_1\) of (0.035, 0.099) does not contain a very particular value: \(\beta_1\) = 0. Recall from the earlier Subsection 11.2.2 that if the population regression slope \(\beta_1\) is 0, this is equivalent to saying there is no relationship between teaching and beauty scores. Since \(\beta_1\) = 0 is not in our plausible range of values for \(\beta_1\), we are inclined to believe that there is in fact a relationship between teaching and beauty scores.

So in this case, the inferential conclusion about the population slope \(\beta_1\) obtained from the 95% confidence interval matches the conclusion reached from the hypothesis test above: the evidence suggests there is a meaningful relationship between teaching and beauty scores!

Recall however from Subsection 9.5.3 that the confidence level is one the many factors that determine confidence interval widths. So for example, say we used a higher confidence level of 99%, the resulting confidence intervals would be wider, and thus the resulting 99% confidence interval for \(\beta_1\) might include 0. The lesson is to remember that any confidence interval based conclusion depends highly on the confidence level used.

What are the calculations that went into computing the two endpoints of the 95% confidence interval for \(\beta_1\)? Recall our sampling bowl example from Section 9.7.2. Since the sampling/bootstrap distribution of the sample proportion \(\widehat{p}\) was bell-shaped, we could use the rule of thumb for bell-shaped distributions to create a 95% confidence interval for \(p\) with the following equation:

\[\widehat{p} \pm \text{MoE}_{\widehat{p}} = \widehat{p} \pm 1.96 \cdot \text{SE}_{\widehat{p}} = \widehat{p} \pm 1.96 \cdot \sqrt{\frac{\widehat{p}(1-\widehat{p})}{n}}\]

We can generalize this to other point estimates that have bell-shaped sampling/bootstrap distributions:

\[\text{point estimate} \pm \text{MoE} = \text{point estimate} \pm 1.96 \cdot \text{SE}\]

We’ll show in Section 11.4 that the sampling/bootstrap distribution for the fitted slope \(b_1\) is in fact bell-shaped as well. Thus we can construct a 95% confidence interval for \(\beta_1\) with the following equation:

\[b_1 \pm \text{MoE}_{b_1} = b_1 \pm 1.96 \cdot \text{SE}_{b_1}\]

What are the values of the standard error \(\text{SE}_{b_1}\)? Recall from the earlier Subsection 11.2.1 that they are in fact in the third column of the regression table. Thus the

\[ \begin{aligned} b_1 \pm 1.96 \cdot \text{SE}_{b_1} &= 0.067 \pm 1.96 \cdot 0.016 = 0.067 \pm 0.031\\ &= (0.036, 0.098) \end{aligned} \]

Much like hypothesis tests however, this confidence interval also only yields valid if the “conditions for inference for regression” discussed in Section 11.3 are met.

11.2.5 How does R compute the table?

Since we didn’t do any bootstrapping or simulation-based procedures to get the values of the standard error, test statistic, p-value, and endpoints of the 95% confidence interval in Table 11.1, you might be wondering how were these values computed? What did R do behind the scenes? Does R run simulations like we’ve been doing using the rep_sample_n() function and the infer package as we’ve been doing in Chapters 8 on sampling, 9 on confidence intervals, and 10?

The answer is no! Much like the theory-based method for constructing confidence intervals you saw in Section 9.7.2 and the theory-based hypothesis test you saw in Section 10.6.1, there are actually mathematical formulas that allow you to construct confidence intervals and conduct hypothesis test for inference for regression. These formulas were derived in a time when computers didn’t exist, so running extensive simulations as we’ve been doing in this book would’ve been impossible.

In particular there is a formula for the standard error of the fitted slope \(b_1\):

\[\text{SE}_{b_1} = \dfrac{\dfrac{s_y}{s_x} \cdot \sqrt{1-r^2}}{\sqrt{n-2}}\]

As with many formulas in statistics, there’s a lot going on here so let’s first break down what each symbol represents: 1) \(s_x\) and \(s_y\) are the sample standard deviations for the explanatory variable bty_avg and the response variable score respectively. 2) \(r\) is the sample correlation coefficient between score and bty_avg. This was computed as 0.187 in Chapter 6. 3) \(n\) is the number of pairs of points in the evals_ch6 data frame, here 463.

To put the relationship into words, the standard error of \(b_1\) depends on the relationship between how the response variable varies and the explanatory variable varies in the \(s_y / s_x\) term. Next it looks into the relationship of how the two variables relate to each other in the \(\sqrt{1-r^2}\) term.

However, the most important observation to make in the above formula is that there is a \(n - 2\) in the denominator. In other words, as the sample size \(n\) increases, the standard error \(\text{SE}_{b_1}\) decreases. Just as we demonstrated in Section 8.3.3 when we used shovels with \(n\) = 25, 50, and 100, the amount of sample-to-sample variation in the fitted slope \(b_1\) will depend on the sample size \(n\). In particular, as the sample size increases, the sampling/bootstrap distribution narrows i.e. the standard error \(\text{SE}_{b_1}\) goes down. Hence our estimates \(b_1\) of the true population slope \(\beta_1\) get more and more precise.

R then uses this formula for the standard error of \(b_1\) to fill in the third column of the regression table and subsequently to construct 95% confidence intervals. What about the hypothesis test? Much like in our theory-based hypothesis test in Section 10.6.1, R uses the following \(t\)-statistic as the test statistic for hypothesis testing:

\[ t = \dfrac{ b_1 - \beta_1}{ \text{SE}_{b_1}} \]

And since the null hypothesis \(H_0: \beta_1 = 0\) is assumed during the hypothesis test, the \(t\)-statistic becomes

\[ t = \dfrac{ b_1 - 0}{ \text{SE}_{b_1}} = \dfrac{ b_1 }{ \text{SE}_{b_1}} \]

What are the values of \(b_1\) and \(\text{SE}_{b_1}\)? They are in the second and third column of the regression table in Table 11.1. Thus the value of 4.09 in the table is computed as 0.067/0.016 = 4.188. Note there is a slight difference due to rounding error.

Lastly, to compute the p-value, you need to compare to observed test statistic of 4.09 to the appropriate null distribution: the sampling distribution of the above \(t\) statistic assuming that \(H_0\) is true. Much like in Section 10.6.1, it can be mathematically proven that this distribution is a \(t\)-distribution with degrees of freedom equal to \(df\) = n-2 = 463-2 = 461.

Don’t worry if you’re feeling a little overwhelmed at this point. There is a lot background theory to understand before you can fully make sense of the equations for theory-based methods. That being said, theory-based methods and simulation-based methods for constructing confidence intervals and conducting hypothesis tests often yield consistent results.

In our opinion, a large benefit of simulation-based methods over theory-based is that they are easier for people new to statistical inference to understand. We’ll replicate the above analysis using a simulation-based approach with the infer package in Section 11.4. In particular, we’ll convince you that the sampling/bootstrap distribution of the fitted slope \(b_1\) is indeed bell-shaped.

11.3 Conditions for inference for regression

Similarly to Section 9.3.2 where we could only use the standard-error based method for constructing confidence intervals if the bootstrap distribution was bell shaped, there are certain conditions that need to be met in order for our hypothesis tests and confidence intervals we described in Section 11.2 above to have valid meaning. These conditions must be met for the underlying mathematical and probability theory to work.

For inference for regression, there are four conditions that need to be met. Note the first four letters of these conditions as highlighted in bold below: LINE. This can serve as a nice reminder of what to check whenever linear regression is performed.

- Linearity of relationship between variables

- Independence of residuals

- Normality of residuals

- Equality of variance

Conditions L, N, and E can be verified through what is known as a residual analysis. Condition I can only be verified through an understanding of how the data was collected. Before we start verifying these conditions, let’s go over a refresher of the concept of residuals.

11.3.1 Residuals refresher

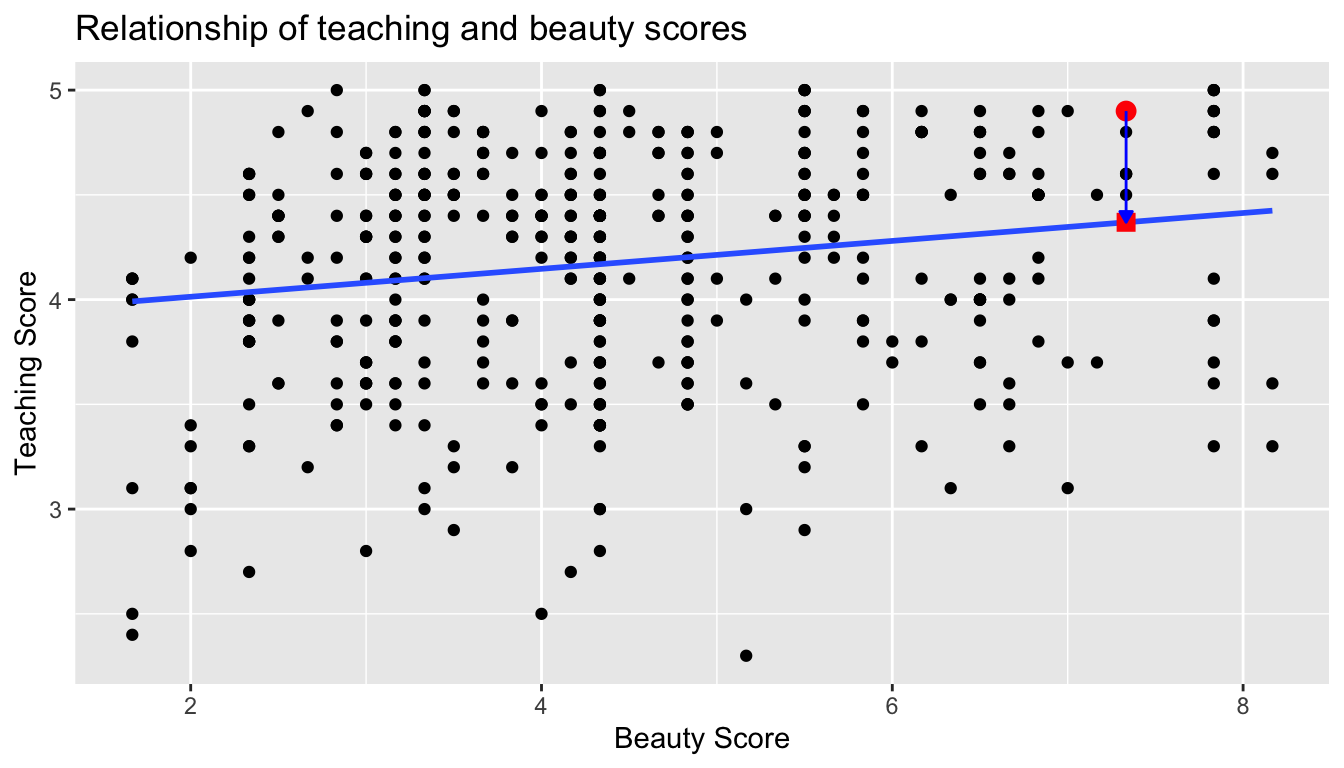

Recall our definition of a residual from Section 6.1.3: it is the observed value minus the fitted value \(y - \widehat{y}\). Recall that residuals can be thought of as the error or the “lack-of-fit” between the observed value \(y\) and the fitted value \(\widehat{y}\) on the blue regression line in Figure 11.1. We illustrate one particular residual out of 463 in Figure 11.2.

FIGURE 11.2: Example of observed value, fitted value, and residual.

Furthermore, we can automate the calculation of all \(n\) = 463 residuals by applying the get_regression_points() function to score_model, which has our regression model saved in it. Observe how the resulting values of residual are roughly equal to score - score_hat (there is a slight difference due to rounding error).

# Fit regression model:

score_model <- lm(score ~ bty_avg, data = evals_ch6)

# Get regression points:

regression_points <- get_regression_points(score_model)

regression_points# A tibble: 463 x 5

ID score bty_avg score_hat residual

<int> <dbl> <dbl> <dbl> <dbl>

1 1 4.7 5 4.214 0.486

2 2 4.100 5 4.214 -0.114

3 3 3.9 5 4.214 -0.314

4 4 4.8 5 4.214 0.586

5 5 4.600 3 4.08 0.52

6 6 4.3 3 4.08 0.22

7 7 2.8 3 4.08 -1.28

8 8 4.100 3.333 4.102 -0.002

9 9 3.4 3.333 4.102 -0.702

10 10 4.5 3.16700 4.091 0.40900

# … with 453 more rowsIdeally when we fit a regression model, we’d like there to be no systematic pattern to these residuals. We’ll be more specific as to what we mean by no systematic pattern when we see Figure 11.2, but let’s keep this notion imprecise for now. This residual analysis to verify conditions 1, 3, and 4 can be performed via appropriate data visualizations. While there are more sophisticated statistical approaches that can also be done, we’ll will focus on the simpler approaches of evaluating plots.

11.3.2 Linearity of relationship

The first condition is that the relationship between the outcome variable \(y\) and the explanatory variable \(x\) must be Linear. Recall the scatterplot in Figure 11.1. We had the explanatory variable beauty score \(x\) on the x-axis and the outcome variable teaching score \(y\) on the y-axis. Would you say that the relationship between \(x\) and \(y\) is linear? It’s hard to say, because of the scatter of the points about the line. Recall that the correlation coefficient between teaching and beauty score was 0.187, indicating a weakly positive linear relationship. In the authors’ opinions, we feel this relationship is “linear enough”.

Let’s present an example where the relationship between \(x\) and \(y\) is very clearly not linear in Figure 11.3. In this case, the points clearly do not form a line, but rather a U-shaped polynomial line. In this case, any inference for regression would not be valid.

FIGURE 11.3: Example of clearly non-linear relationship.

11.3.3 Independence of residuals

The second condition is that the residuals must be Independent. In other words, the different observations in our data must be independent of one another.

For our UT Austin data, while there is data on 463 courses, there are only 94 unique instructors. In other words, the same professor can be included more than once in our data. The original evals data frame that we used to construct the evals_ch6 data frame has a variable prof_ID of an anonymized identification variable for the professor:

evals %>%

select(ID, prof_ID, score, bty_avg)# A tibble: 463 x 4

ID prof_ID score bty_avg

<int> <int> <dbl> <dbl>

1 1 1 4.7 5

2 2 1 4.100 5

3 3 1 3.9 5

4 4 1 4.8 5

5 5 2 4.600 3

6 6 2 4.3 3

7 7 2 2.8 3

8 8 3 4.100 3.333

9 9 3 3.4 3.333

10 10 4 4.5 3.16700

# … with 453 more rowsFor example, the professor with ID equal to 1 taught the first 4 courses in the data, the professor with ID equal to 2 taught the next 3, and so on. Given that the same professor taught the first four courses, it is reasonable to expect that the teaching score for these courses are related. If a professor gets a high score in one class, chances are fairly good they’ll get a high score in another. This dataset thus provides different information than if we had 463 unique instructors teaching the 463 courses.

In this case we say there exists dependence between observations. The first four courses taught by professor 1 are dependent, the next 3 courses taught by professor 2 are related, and so on. Any proper analysis of this data needs to take into account that we have repeated measures for the same profs.

So in this case, the independence conditions is not met. What does this mean for our analysis? We’ll address this in Subsection 11.3.6 below, after we check the remaining two conditions.

11.3.4 Normality of residuals

Let’s now get a little more precise in our definition of no systematic pattern in the residuals. The third condition is that the residuals should follow a Normal distribution. Furthermore, the center of this distribution should be 0. In other words, sometimes the regression model will make positive errors: \(y - \widehat{y} > 0\). Other times, the regression model will make equally negative errors: \(y - \widehat{y} < 0\). However, on average the errors should be 0.

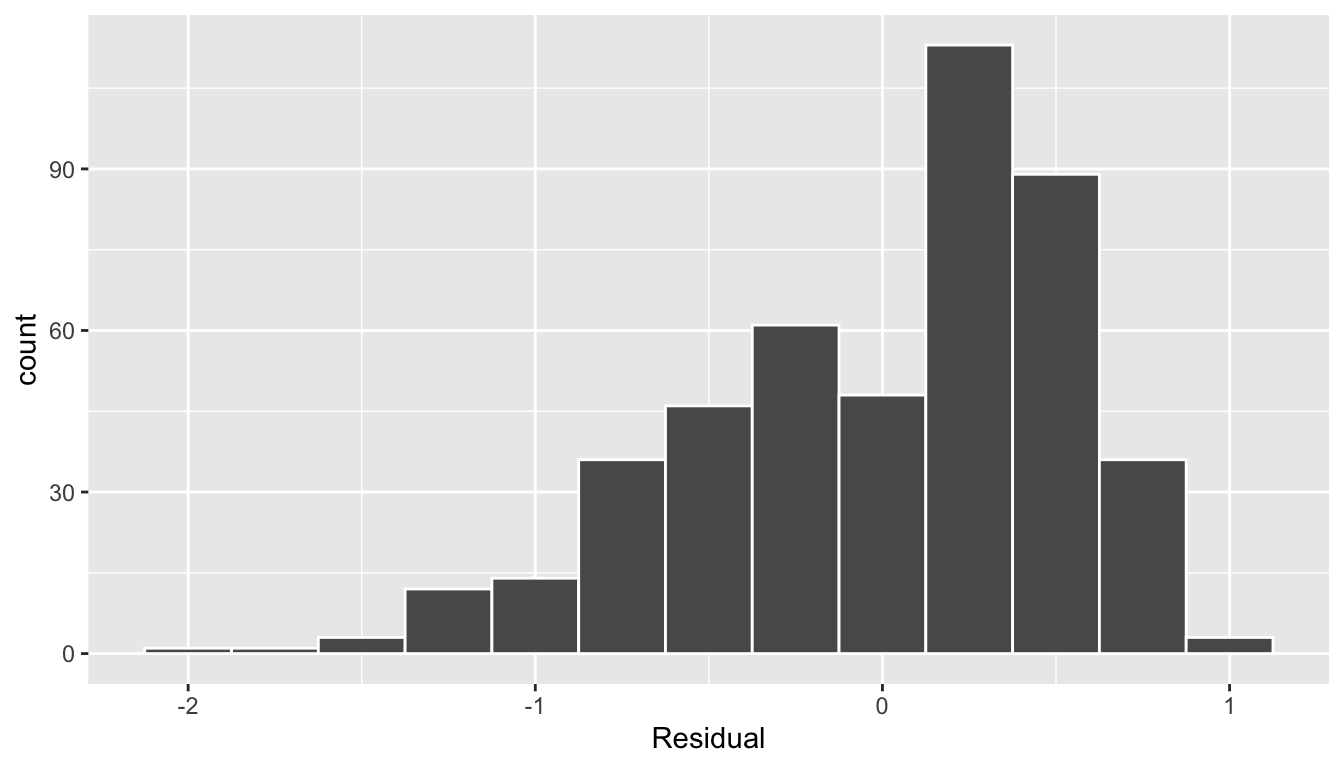

The simplest way to check for this condition of the normality of the residuals is to look at a histogram, which we visualize in Figure 11.4.

ggplot(regression_points, aes(x = residual)) +

geom_histogram(binwidth = 0.25, color = "white") +

labs(x = "Residual")

FIGURE 11.4: Histogram of residuals.

This histogram seems to indicate that we have more positive residuals than negative. Since the residual \(y-\widehat{y}\) is positive when \(y > \widehat{y}\), it seems our regression model’s fitted teaching scores \(\widehat{y}\) tend to underestimate the true teaching scores \(y\). This histogram has a slight left-skew in that there is a tail on the left. Another way to say this is this data exhibits a negative skew. Is this a problem? Again, there is a certain amount of subjectivity in the response. In the authors’ opinion, while there is a slight skew or pattern to the residuals, it isn’t drastic. On the other hand, others might disagree with our assessment.

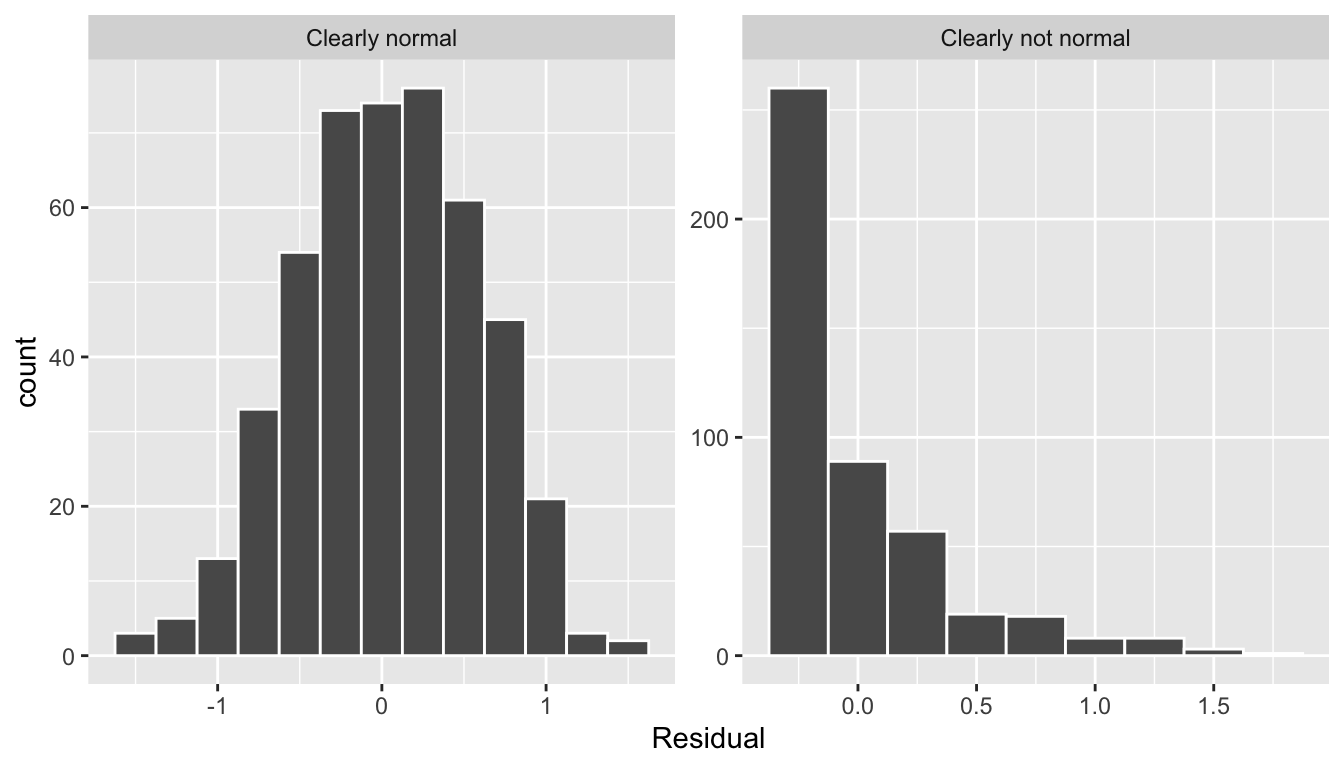

Let’s present an example where the residuals clearly follow a normal distribution and an example where they don’t in Figure 11.5. In this case of the model yielding the clearly non-normal residuals on the right, any inference for regression would not be valid.

FIGURE 11.5: Example of clearly normal and clearly non-normal residuals.

11.3.5 Equality of variance

Continuing with our ideal that there be no systematic pattern to the residuals, the fourth and final condition is that the residuals should exhibit Equal variance for across all values of \(x\). In other words, the value and spread of the residuals should not depend on the value of \(x\).

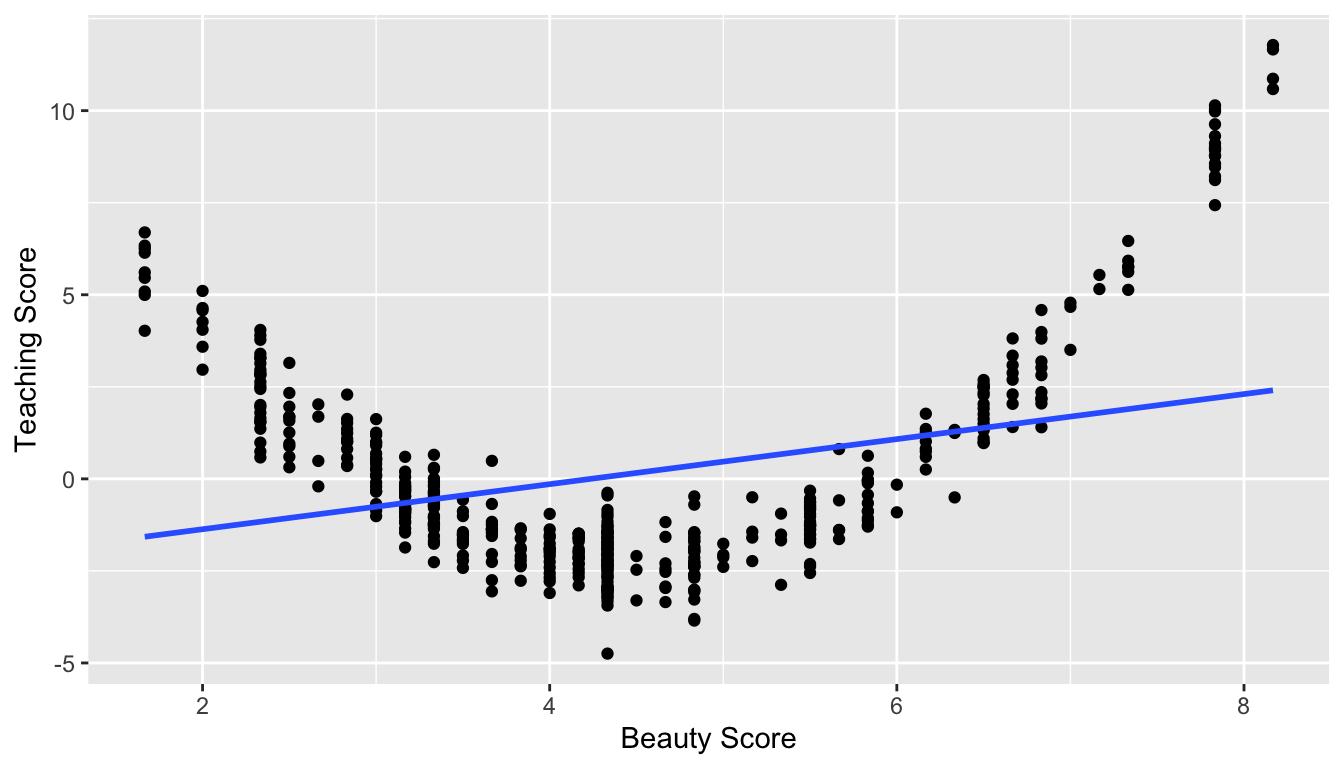

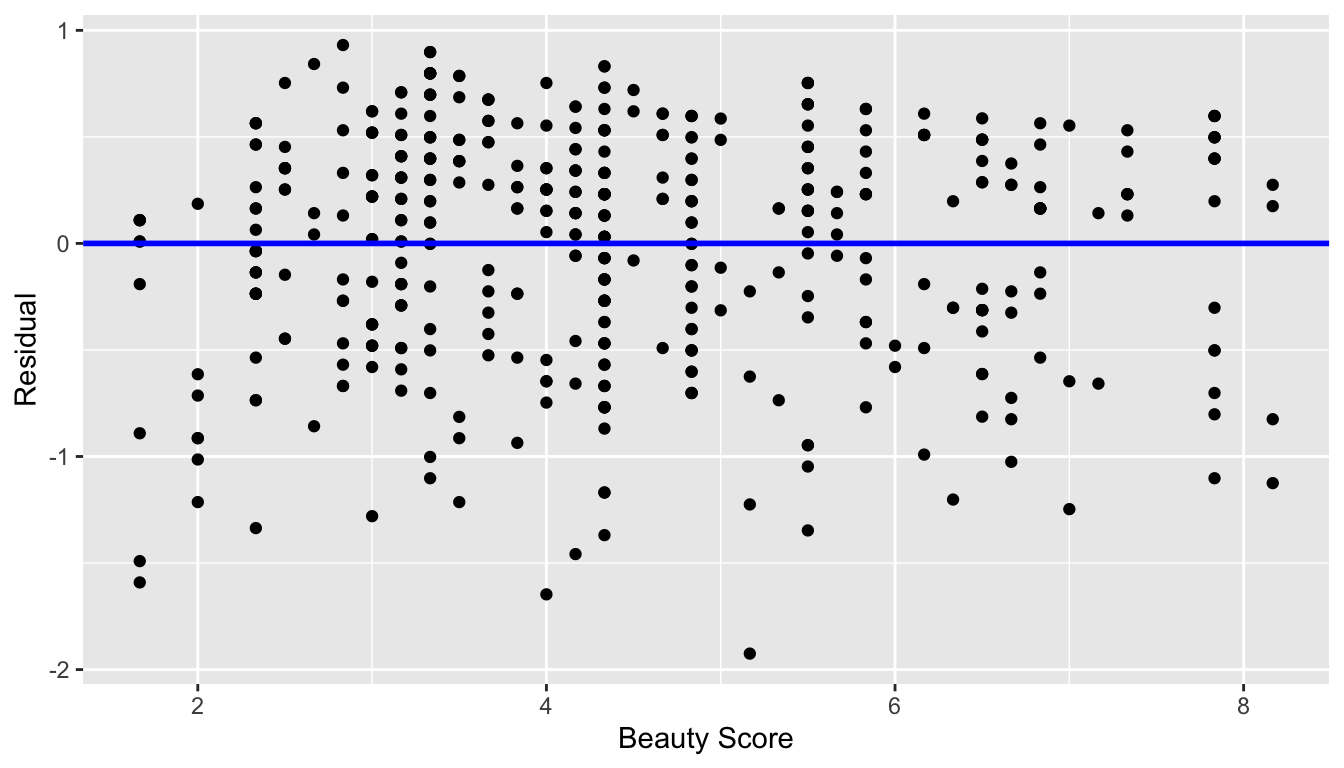

First, recall the scatterplot in Figure 11.1. We had the explanatory variable beauty score \(x\) on the x-axis and the outcome variable teaching score \(y\) on the y-axis. Instead, let’s create a scatterplot that has the same values on the x-axis, but now with the residual \(y-\widehat{y}\) on the y-axis instead in Figure 11.6 below.

ggplot(regression_points, aes(x = bty_avg, y = residual)) +

geom_point() +

labs(x = "Beauty Score", y = "Residual") +

geom_hline(yintercept = 0, col = "blue", size = 1)

FIGURE 11.6: Plot of residuals over beauty score.

You can think of this plot as a version of the plot with the regression line in blue Figure 11.1, but with the regression line flattened out to \(y=0\). Looking at this plot, would you say that the spread of the residuals around the blue line is constant? This question is rather qualitative and subjective in nature, thus different people may respond with different answers to the above question. For example, some people might say that there is slightly more variation in the residuals for smaller values of \(x\) than with for higher ones. However, it can be argued that there isn’t a drastic inequality .

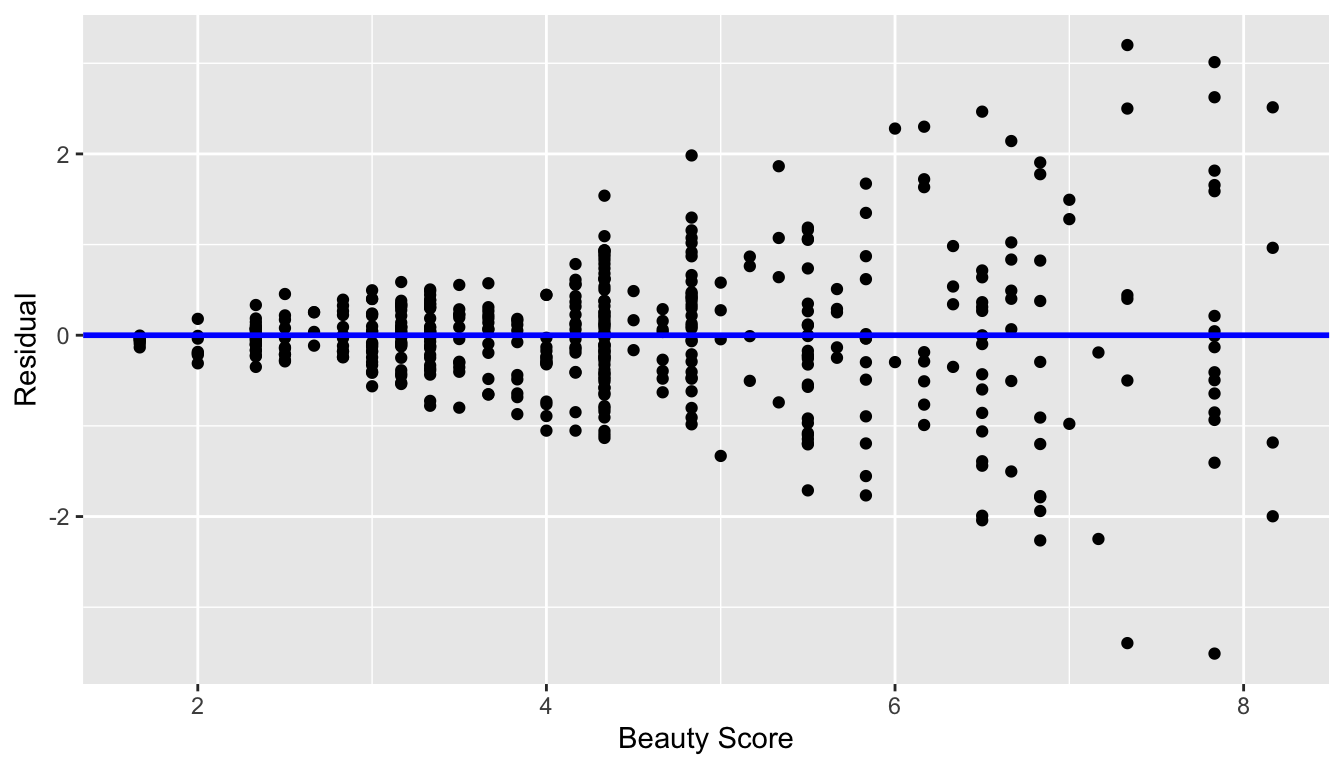

Let’s present an example where the residuals clearly do not have equal variance for all values of \(x\) in Figure 11.7. The spread of the residuals increases as the value of \(x\) increases; this situation is known as heteroskedasticity. In this case of the model yielding these residuals on the right, any inference for regression would not be valid.

FIGURE 11.7: Example of clearly non-equal variance.

Learning check

(LC11.1) Continuing with our regression using age as the explanatory variable and teaching score as the outcome variable, use the get_regression_points() function to get the observed values, fitted values, and residuals for all 463 instructors. Perform a residual analysis and look for any systematic patterns in the residuals. Ideally, there should be little to no pattern.

11.3.6 What’s the conclusion?

Let’s list our four conditions again and indicate whether or not they were satisfied in our analysis:

- Linearity of relationship between variables: Yes

- Independence of residuals: No

- Normality of residuals: Somewhat

- Equality of variance: Yes

So what does this mean for the results of our confidence intervals and hypothesis tests in Section 11.2? Our model of score ~ bty_avg should be viewed as a preliminary analysis that needs to be improved on.

First, the Independence condition. The fact that there exist dependencies between different rows in evals_ch6 must be addressed. In more advanced statistics courses, you’ll learn how incorporate the fact that there are 94 instructors teaching multiple courses. One such technique is called hierarchical AKA multilevel modeling.

Second, when conditions L, N, E are not met, it often means there is a shortcoming in our model. For example, is using only a single explanatory variable beauty score sufficient? Should we be including more information via a multiple regression model as we did in Chapter 7? Or perhaps a more sophisticated form of regression modeling, like logistic or Poisson regression is necessary?

In our case, the best we can do is view the result suggested by our confidence intervals and hypothesis testing that there is in fact a relationship between teaching and beauty scores as preliminary. However further investigation is warranted, in particular by improving the model so that the 4 conditions are met. When the 4 conditions are at least roughly met, then we can put more faith into our confidence intervals and p-values.

The conditions for inference in regression problems are a key part of regression analysis that are of vital importance to the processes of constructing confidence intervals and conducting hypothesis tests. However, it is often the case with regression analysis in the real-world that not all the conditions are completely. Furthermore, as you saw in verifying there is a level of subjectivity in the residual analyses to verify the L, N, and E conditions. So what can you do? We as authors advocate for transparency in communicating all results and letting the stakeholders of analyses know about any shortcomings of a model as needed or if the model is “good enough.”

11.4 Simulation-based inference for regression

Recall in Section 11.2.5 when we interpreted all the third through seventh columns if the regression table in Table 11.1, we stated in Subsection 11.2.5 that R doesn’t do simulations to compute these values. Rather R uses theory-based methods that involve mathematical formulas.

In this section, we’ll use the simulation-based methods you previously learned in Chapters 9 and 10 to replicate the above results. In particular, we’ll use the infer package workflow to

- Construct a 95% confidence interval for the population slope \(\beta_1\) using bootstrap resampling with replacement. We did this previously in Sections 9.4 with the

penniesdata and 9.6 with themythbusters_yawndata. - Conduct a hypothesis test of \(H_0: \beta_1 = 0\) vs \(H_A: \beta_1 \neq 1\) using permuting/shuffling. We did this previously in Sections 10.3 with the

promotionsdata and 10.5 with themovies_sampleIMDb data.

11.4.1 Confidence interval for slope

We construct the bootstrap distribution for the fitted slope \(b_1\) by following the steps below. We then use this bootstrap distribution to construct a 95% confidence interval for \(\beta_1\). Remember that these denote a range of plausible values for an unknown true population slope \(\beta_1\) quantifying the relationship between teaching score on beauty score.

specify()the variables of interest inevals_ch6with the formula:score ~ bty_avg.generate()replicates by usingbootstrapresampling with replacement from the original sample of 463 courses. We generatereps = 1000such replicates.calculate()the summary statistic of interest: the fittedslope\(b_1\)

Here, the bootstrapping with replacement is done row-by-row. Thus, the original pairs of points score and bty_avg are linked together and may be repeated with each resample.

bootstrap_distn_slope <- evals_ch6 %>%

specify(formula = score ~ bty_avg) %>%

generate(reps = 1000, type = "bootstrap") %>%

calculate(stat = "slope")bootstrap_distn_slope# A tibble: 1,000 x 2

replicate stat

<int> <dbl>

1 1 0.0651055

2 2 0.0382313

3 3 0.108056

4 4 0.0666601

5 5 0.0715932

6 6 0.0854565

7 7 0.0624868

8 8 0.0412859

9 9 0.0796269

10 10 0.0761299

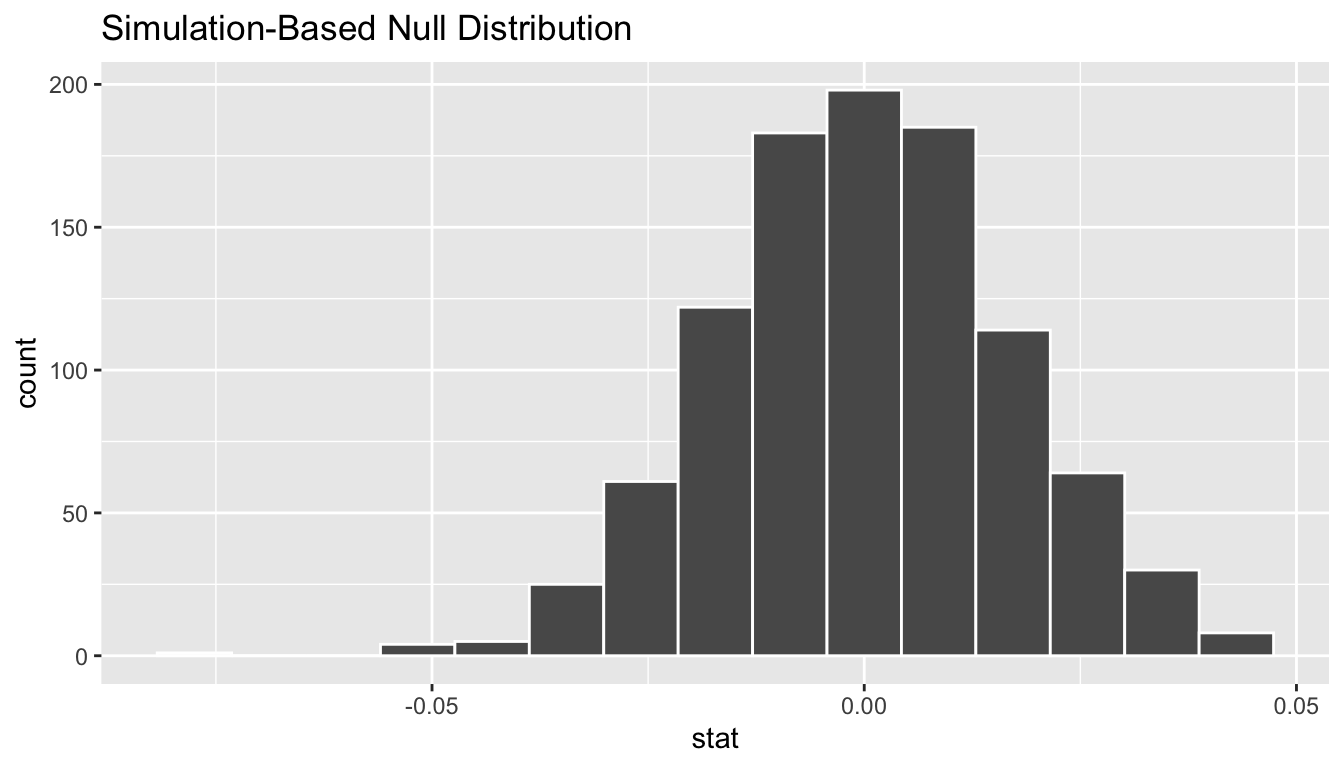

# … with 990 more rowsObserve how we have 1000 values of the bootstrapped slope \(b_1\). Let’s visualize the resulting bootstrap distribution in Figure 11.8. Recall from Section 9.7.1 that shape of the bootstrap distribution of \(b_1\) closely approximates the shape of the sampling distribution of \(b_1\), in particular the spread.

visualize(bootstrap_distn_slope)

FIGURE 11.8: Bootstrap distribution.

Percentile-method

First, let’s compute the 95% confidence interval for \(\beta_1\) using the percentile method, in other words by identifying the 2.5th and 97.5th percentiles which include the middle 95% of values. Recall that this method does not require the bootstrap distribution to be normally shaped.

percentile_ci <- bootstrap_distn_slope %>%

get_confidence_interval(type = "percentile", level = 0.95)

percentile_ci# A tibble: 1 x 2

`2.5%` `97.5%`

<dbl> <dbl>

1 0.0323411 0.0990027The resulting percentile-based 95% confidence interval for \(\beta_1\) of (0.032, 0.099) is similar to the confidence interval in the regression Table 11.1 of (0.035, 0.099).

Standard error method

Since the bootstrap distribution in Figure 11.8 appears to be roughly bell-shaped, we can also construct a 95% confidence interval for \(\beta_1\) using the standard error method. This necessitates the fitted slope \(b_1\). While we saw in the regression table in Table 11.1 that this was \(b_1\) = 0.067, we can also use the infer pipeline with the generate() step removed:

observed_slope <- evals %>%

specify(score ~ bty_avg) %>%

calculate(stat = "slope")

observed_slope# A tibble: 1 x 1

stat

<dbl>

1 0.0666370se_ci <- bootstrap_distn_slope %>%

get_ci(level = 0.95, type = "se", point_estimate = observed_slope)

se_ci# A tibble: 1 x 2

lower upper

<dbl> <dbl>

1 0.0333767 0.0998974The resulting standard error-based 95% confidence interval for \(\beta_1\) of (0.033, 0.1) is however slightly different than the confidence interval in the regression Table 11.1 of (0.035, 0.099).

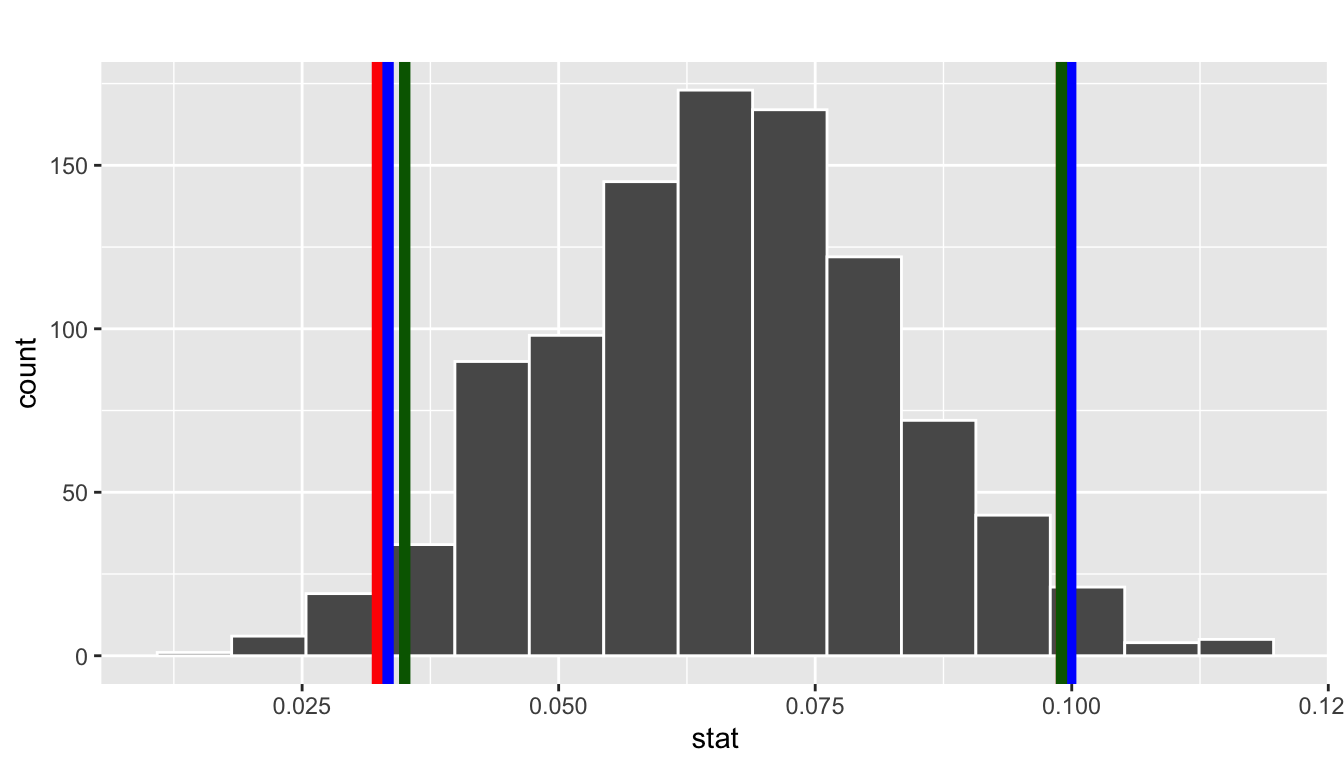

Comparing all three

Let’s compare all three confidence intervals in Figure 11.9, where the percentile-based confidence interval is in red, the standard error based confidence interval is in blue, and the theory-based confidence interval from the regression table is in green.

visualize(bootstrap_distn_slope) +

shade_confidence_interval(endpoints = percentile_ci, fill = NULL,

color = "red") +

shade_confidence_interval(endpoints = se_ci, fill = NULL,

color = "blue") +

shade_confidence_interval(endpoints = c(0.035, 0.099), fill = NULL,

color = "darkgreen")

FIGURE 11.9: Two 95 percent confidence intervals: percentile method in red, standard error method in blue.

Observe that all three are quite similar!

11.4.2 Hypothesis test for slope

We will set up this hypothesis testing process consistent with the “There is Only One Test” diagram in Figure 10.14 using the infer package. Let’s conduct a hypothesis test of \(H_0: \beta_1 = 0\) vs \(H_A: \beta_1 \neq 1\). We need to think about what it means for \(\beta_1\) to be zero as stated in the null hypothesis \(H_0\). If \(\beta_1 = 0\), we said earlier that there is no relationship between the teaching and beauty scores. Assuming this particular null hypothesis means that in our “hypothesized universe” there is no relationship between score and bty_avg and thus we can shuffle/permute the bty_avg variable to no consequence. We, therefore, have another example of shuffling/permuting of data under the null hypothesis.

We construct the null distribution of the fitted slope \(b_1\) by following the steps. Recall from Section @ref() that the null distribution is merely the sampling distribution of our test statistic \(b_1\) assuming the null hypothesis \(H_0\) is true.

specify()the variables of interest inevals_ch6with the formula:score ~ bty_avg.hypothesize()the null hypothesis ofindependence. Recall from Section 10.3 that this is a step that needs to be added for hypothesis test.generate()replicates by permuting/shuffling the explanatory variablebty_avgfrom the original sample of 463 courses. We generatereps = 1000such replicates.calculate()the test statistic of interest: the fittedslope\(b_1\)

To further reinforce the process being done in the pipeline, we’ve added the type = "permute" argument to generate(). In this case, we permuted the values of one variable across the values of the other 1000 times and calculated a "slope" coefficient for each of these 1000 generated samples.

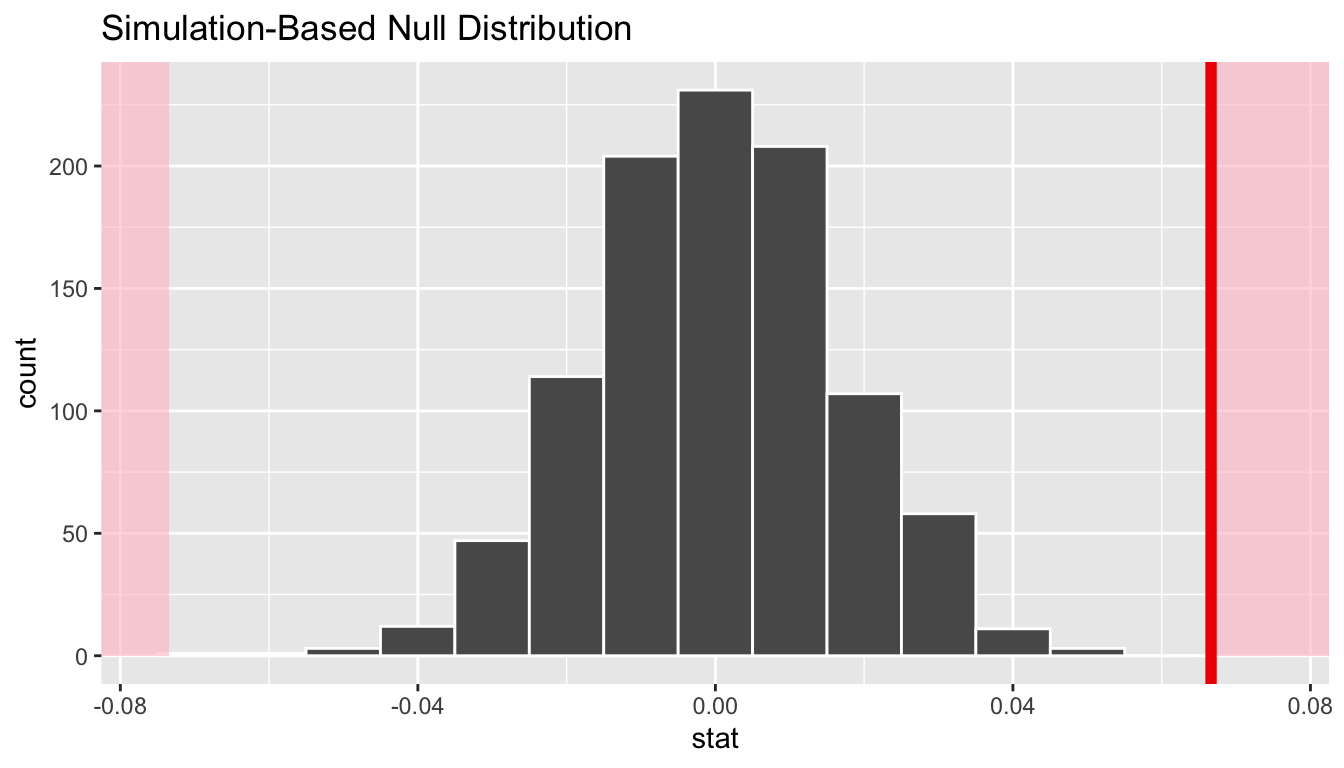

null_distn_slope <- evals %>%

specify(score ~ bty_avg) %>%

hypothesize(null = "independence") %>%

generate(reps = 1000, type = "permute") %>%

calculate(stat = "slope")Let’s visualize the resulting null distribution for the fitted slope \(b_1\) in Figure 11.10. Notice how it is centered at \(b_1\) = 0. This is because in our hypothesized universe, there is no relationship between score and bty_avg, or in other words \(\beta_1\) = 0. Thus the most typical fitted slope \(b_1\) we observe across our simulations is 0. Observe also how there is variation around this central value of 0.

visualize(null_distn_slope)

FIGURE 11.10: Null distribution.

Let’s visualize this p-value in the above null distribution by comparing it to the observed test statistic of \(b_1\) = 0.067. We’ll do this by adding a shade_p_value() layer in Figure 11.11

visualize(null_distn_slope) +

shade_p_value(obs_stat = observed_slope, direction = "both")

FIGURE 11.11: Null distribution and p-value.

Since 0.067 falls far to the right of this null distribution and doesn’t overlap it, we can say that we have a \(p\)-value of 0. We, thus, have evidence that suggests there is a relationship between the beauty score and teaching score of University of Texas faculty members. What is the numerical value of the p-value? Let’s use the get_p_value() function with the same inputs as the shade_p_value() function from above

null_distn_slope %>%

get_p_value(obs_stat = observed_slope, direction = "both")# A tibble: 1 x 1

p_value

<dbl>

1 0This matches the p-value of 0 in the regression table in Table 11.1. When the conditions for inference for regression are met and the null distribution has the characteristic bell shape, we are likely to see similar results between the simulation-based and theoretical results.

Learning check

(LC11.2) Repeat the inference above but this time for the correlation coefficient instead of the slope. Note the implementation of stat = "correlation" in the calculate() function of the infer package.

11.5 Conclusion

11.5.1 Summary

We’ve now completed our last two sampling scenarios out of six first introduced in Table 8.8 on the six scenarios of sampling for inference. Armed with the regression modeling techniques you learned in Chapters 6 and 7, your understanding of sampling for inference in Chapter 8, and tools for statistical inference like confidence intervals and hypothesis tests in Chapters 9 and 10, you’re now able to study a wide array of data!

| Scenario | Population parameter | Notation | Point estimate | Notation. |

|---|---|---|---|---|

| 1 | Population proportion | \(p\) | Sample proportion | \(\widehat{p}\) |

| 2 | Population mean | \(\mu\) | Sample mean | \(\overline{x}\) or \(\widehat{\mu}\) |

| 3 | Difference in population proportions | \(p_1 - p_2\) | Difference in sample proportions | \(\widehat{p}_1 - \widehat{p}_2\) |

| 4 | Difference in population means | \(\mu_1 - \mu_2\) | Difference in sample means | \(\overline{x}_1 - \overline{x}_2\) |

| 5 | Population regression slope | \(\beta_1\) | Fitted regression slope | \(b_1\) or \(\widehat{\beta}_1\) |

| 6 | Population regression intercept | \(\beta_0\) | Fitted regression intercept | \(b_0\) or \(\widehat{\beta}_0\) |

11.5.2 Additional resources

An R script file of all R code used in this chapter is available here.

11.5.3 What’s to come

You’ve now concluded the last major part of the book on “Statistical Inference via infer.” The closing chapter concludes with a case study on house prices in Seattle, Washington in the US. You’ll see there how the principles in this book can apply to help you be a great storyteller with data!